I delivered Remember The Rubber Hose, a talk on privacy and distributed applications, at DappCon Berlin 2018. Skipping the introduction and going straight to the beef…

Common knowledge

Now, hopefully, everything I’m going to say here will be obvious to you. Best case scenario, I’m preaching to the choir.

Let us put aside for a moment the topic of decentralization and distributed apps at large.

Have you ever tried to figure out what your potential users know about decentralized platforms?

Even just asking about ledgers. Say, what’s the difference between Bitcoin and Ethereum.

I’m not just talking about average users on the street. I mean even tech people who are working in other areas.

Most of them don’t know. Tech people who are not into the space might know that Ethereum lets you do smart contracts, maybe they’ve heard it has a Turing-complete virtual machine, but not a lot beyond that.

People do know a few things, though. Show of hands. Please raise your hand if you’ve heard one of these before.

- The “crypto” comes from all the data out there being encrypted…

- Blockchains are private because they are decentralized…

- And this makes them anonymous and untraceable.

Yes, these are misconceptions.

Yes, users should know what a tool is for before they use it.

But I think we are partly to blame for these misconceptions.

We talk a lot about putting people in control of their data and giving them back their privacy, yet we don’t tell them what that actually means.

Then this gets conflated with the misunderstandings they read about online and it all mixes up in their heads into some ideal image.

They end up thinking these tools are something protean that does everything they want and then some, with no trade-offs.

This is compounded by the fact that this is a new ecosystem and these are pretty complex tools we’re talking about.

There are different concepts users need to internalize: consensus, decentralized storage, governance models, identity management, proof of whatevers, and what the hell is a snark even?

Hey, I’m working in tech and actively involved in the space, and a chunk of the time I spent planning this talk went into checking my facts, since things change so quickly.

Signaling

For instance, it was encouraging to confirm that Vitalik Buterin is changing his stance on privacy. He wrote on Reddit that he saw it “as a way to prevent signalling concerns from encompassing all of our activity”.

And, well, he’s not wrong.

If we’re transacting money or collectibles or anything on a public blockchain which doesn’t support anonymous transactions… it’ll be public by definition.

There’s a non-trivial amount of signaling involved in anything one does in public.

What I suspect, however, is that the type of signaling he’s concerned about is very much a first-world problem.

For us, here, in a well-run country with a well-functioning society, signaling is only an issue of social mechanics. It’s something you can choose to disregard, or work around, or consciously avoid.

It’s going to take some mental bandwidth, maybe fending off an Internet mob, but that’s pretty much it.

On the blockchain

Now, every so often you hear someone describe what they are doing as “we are putting X on the blockchain”.

What that mainly disregards is that blockchains are logs. Logs are very useful, in some contexts.

Yet in these cases the plan is to store this or that set of (often very sensitive) personal data on a blockchain, out there, to be logged and replicated across a vast network until the heat death of the universe.
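To make the "blockchains are logs" point concrete, here is a minimal sketch of an append-only, hash-chained log. It is a toy, not how any particular chain is implemented: the class name, the payload strings, and the all-zero genesis value are all made up for illustration. What it shows is that once an entry is in, taking it out breaks every hash that comes after it.

```python
import hashlib


def entry_hash(prev_hash: str, payload: str) -> str:
    """Hash a payload together with the hash of the entry before it."""
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class AppendOnlyLog:
    """Toy hash-chained log (hypothetical, for illustration only)."""

    GENESIS = "0" * 64  # assumed placeholder for "no previous entry"

    def __init__(self):
        self.entries = []  # list of (payload, hash) tuples

    def append(self, payload: str) -> str:
        prev = self.entries[-1][1] if self.entries else self.GENESIS
        digest = entry_hash(prev, payload)
        self.entries.append((payload, digest))
        return digest

    def verify(self) -> bool:
        """Recompute the chain and check every stored hash."""
        prev = self.GENESIS
        for payload, digest in self.entries:
            if entry_hash(prev, payload) != digest:
                return False
            prev = digest
        return True


def demo():
    log = AppendOnlyLog()
    log.append("alice pays bob 5")
    log.append("encrypted personal record (made-up payload)")
    log.append("bob pays carol 2")
    valid_before = log.verify()

    # Try to "forget" the sensitive entry after the fact.
    del log.entries[1]
    valid_after = log.verify()
    return valid_before, valid_after


if __name__ == "__main__":
    print(demo())
```

Every replica that wants a valid chain has to keep the entry, encrypted or not. That is the property that makes a log useful, and it is the same property that makes it a poor place for data someone may need to take back.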

This is considered generally safe because it’ll be encrypted to a key only the end user has.

This comes with a kind of fundamental assumption.

Signaling, Gone Bad

Show of hands again:

- Who believes in keeping control of their private keys?

- Who expects public key cryptography will keep our data safe from attackers at least until someone cracks the quantum computer issue?

- Who has ever had a gun to their head?

That last one… that’s where signaling concerns me.

In a lot of places, sending the wrong signal can get you in trouble, or become an issue of life and death.

It doesn’t even need to be a rogue state. Just regular criminals with express kidnappings and armed robberies will do.

Because a common justification for doing what we do is that it’ll make the world a better place.

It’s going to improve people’s lives.

And that’s something for which pseudonymity is not enough.

Pseudonymity is nice. It has a use. But the unfortunate thing is that it only needs to be broken once. And if you are pseudonymous, you are trusting the operational security of every individual and system you interact with.
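Here is a rough sketch of why one break is enough. The ledger, the addresses, and the name are all invented, and real chain analysis is far more involved than this. The point is only that on a public ledger, a single leaked link between an address and a person turns the whole history of that address into that person's history.

```python
# Hypothetical public ledger: (sender, receiver, amount). All values made up.
LEDGER = [
    ("addr_exchange", "addr_7f3", 120),
    ("addr_7f3", "addr_shop", 5),
    ("addr_miner", "addr_7f3", 40),
    ("addr_7f3", "addr_charity", 15),
    ("addr_9c1", "addr_shop", 3),
]


def history_for(address, ledger):
    """Every transaction the address took part in, as sender or receiver."""
    return [tx for tx in ledger if address in (tx[0], tx[1])]


def balance_for(address, ledger):
    """Net amount received minus amount sent, from public data alone."""
    balance = 0
    for sender, receiver, amount in ledger:
        if receiver == address:
            balance += amount
        if sender == address:
            balance -= amount
    return balance


def deanonymize(leaks, ledger):
    """leaks maps address -> real-world name, e.g. from one careless counterparty."""
    return {
        name: {
            "balance": balance_for(address, ledger),
            "history": history_for(address, ledger),
        }
        for address, name in leaks.items()
    }


if __name__ == "__main__":
    # One leak, from any of the parties "addr_7f3" ever dealt with.
    print(deanonymize({"addr_7f3": "Alice"}, LEDGER))
```

Nothing here needed a key or a password. Anyone holding a copy of the ledger and that one mapping can run it, retroactively, over everything Alice has ever done with that address.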

Suppose you get outed as a crypto millionaire.

If you live in a safe country, with little violent crime, what’s the worst thing that can happen? You get put in the wrong tax bracket? Your neighbor figures it out and considers you one of the bourgeois?

If these are the main concerns, then all those other features - the lack of reliance on outside institutions, the censorship resistance, the pseudonymity - they start sliding away from fundamental features and inching closer to nice-to-haves.

The people who can truly benefit from decentralized, censorship-resistant technology are also likely to be the ones in countries where every bit of information out there can cause problems for them, or even put them in danger.

Those of us who have libertarian inclinations make the mistake of thinking of potential attackers as malicious state actors. The oppressive regime scenario.

That’s a threat vector. It happens. It’s happened recently, even. We’ve had state actors confiscate citizens’ equipment, which they knew these people had because of metadata: power consumption.

When I bring that scenario up, people’s usual reaction is “they get my equipment, but they don’t get my passphrase, so I still have my crypto and data”.

Which sounds about right at first blush.

But if anyone here is thinking that, well, you probably didn’t raise your hand when I asked if you’d ever had a gun to your head.

Rubber hose cryptanalysis

Who has heard of rubber hose cryptanalysis?

For those of you who haven’t: Rubber hose cryptanalysis is an easy, cheap, and almost infallible way to break a ciphertext. It only has two steps.

- You find someone who knows the password,

- And then you beat them with a rubber hose until they give it to you.

That’s all it takes.

Unless you’re building cryptographic primitives, you may need to adjust your threat model.

An attacker here is not Emily the Eavesdropper or Gustav Government.

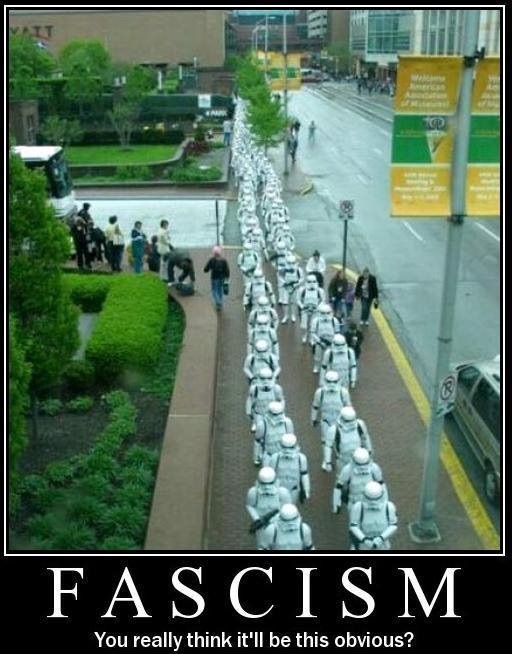

We’re talking about something a lot more like this.

If this guy wants to get information, your user will give it to him.

We need to make it harder for these criminals to find their victims. We need to make it easier for people to be private.

Making the world a better place

Which brings us back to what we were talking about: making the world a better place.

If privacy is only a secondary concern, you might be improving things, here, in the first world.

Here, our main concern is to get from A to B quickly enough without wondering how long it’ll take for a cab to show up.

Or sharing our vacation pictures easily with our folks without feeding the photos into some massive, centralized ad-serving maw.

Here, these efforts are making our world better.

Let’s face it.

If you look at it world-wide, we belong to a small group.

We speak multiple languages, are technologically literate, and can afford to take a couple days off just to hear other people speak about an obscure topic.

Compared to the majority that could really benefit from these empowering tools, we are privileged.

And look, I’m not going to be a hypocrite here. If these improvements make our life better, I’ll take them. I’m lucky enough to be in that particular demographic right now.

But if this is our target audience… then we don’t get to talk about making the world a better place.

Because in the unstable spots, the places that are still in development, the areas where people could use a leg up the most, the lack of privacy is going to hold them back.

Think of the users

Defaults

This is about providing sane defaults that give your users more privacy.

Users can change your settings. Sure.

Assuming they find the settings, and research what the difference is, and are not afraid to tinker with the environment for fear of losing their data.

There was this Guardian article a few years ago which summarized several tests and shed some light on how often people customize their tools.

I’ll save you the time. It didn’t matter if it was about changing the default maps application or the configuration for Microsoft Word, they were fairly consistent.

Between 90% and 95% of users never changed a single default setting.

If your defaults aren’t private, they are going to stay not private.
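As a small sketch of what "private by default" can look like in code, assume a settings object where every field is a hypothetical example and `True` always means "this exposes something". A user who never opens the settings screen, which is most of them, gets the object exactly as declared.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class PrivacySettings:
    """Hypothetical settings; every default is the private choice."""

    share_usage_analytics: bool = False
    public_profile: bool = False
    reuse_addresses: bool = False


def load_settings(overrides=None):
    """Start from the private defaults and apply only explicit user choices."""
    overrides = overrides or {}
    known = {f.name for f in fields(PrivacySettings)}
    unknown = set(overrides) - known
    if unknown:
        raise ValueError(f"unknown settings: {sorted(unknown)}")
    return replace(PrivacySettings(), **overrides)


def exposed(settings):
    """Names of the settings that currently expose user data."""
    return [f.name for f in fields(settings) if getattr(settings, f.name)]


if __name__ == "__main__":
    # The user who never touches anything.
    print(exposed(load_settings()))
    # The rare user who opts in to one thing, on purpose.
    print(exposed(load_settings({"public_profile": True})))
```

The design choice is that exposure is always an opt-in the user has to make, never an opt-out they have to discover.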

Mind the metadata

Another way in which you can help your users is by minding the metadata. For instance, can you transfer data in a P2P manner, so that it doesn’t necessarily need to go out to the net at large?

Power consumption, as I mentioned earlier, is another form of metadata. In Germany, for that to be a problem, you’d probably need either the power company or the government to turn against you.

But in a lot of places, power consumption information is easily available. The data is still manually collected, so the meters are exposed to the street, for anyone to take notes as they walk by.

I know the massive power consumption of Proof of Work is a concern for some people from the sustainability standpoint, but for me, it’s also a privacy issue.

Plausible deniability

Maybe you can’t fully obscure the metadata, or there’s no way you can figure out to make your users completely anonymous.

In that case, you may be able to provide a feature which gives them some plausible deniability. An example here is the hidden volumes VeraCrypt supports, which co-exist with a main, visible volume. If you are forced to decrypt a file, you could just unlock the main volume, and nobody would know the hidden volume was there.

That way, if one of your users is attacked, they can show the attackers a fake, almost empty wallet.
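One way a wallet could offer something like this is sketched below. This is not how VeraCrypt's hidden volumes work. It borrows the passphrase-salted seed derivation that BIP39 describes (PBKDF2-HMAC-SHA512, 2048 iterations, salt "mnemonic" plus the passphrase), and the mnemonic and passphrases are placeholders rather than real, checksum-valid values. Every passphrase, including the empty one, yields a valid-looking seed, so there is no "wrong password" error to give the hidden wallet away.

```python
import hashlib
import unicodedata


def derive_seed(mnemonic: str, passphrase: str = "") -> bytes:
    """Derive a 64-byte seed from a mnemonic and an optional passphrase.

    Follows the BIP39-style construction; any passphrase produces some seed.
    """
    password = unicodedata.normalize("NFKD", mnemonic).encode("utf-8")
    salt = unicodedata.normalize("NFKD", "mnemonic" + passphrase).encode("utf-8")
    return hashlib.pbkdf2_hmac("sha512", password, salt, 2048, dklen=64)


if __name__ == "__main__":
    # Placeholder words, not a real recovery phrase.
    mnemonic = "placeholder words standing in for a real recovery phrase"

    decoy_seed = derive_seed(mnemonic)  # what you hand over under duress
    real_seed = derive_seed(mnemonic, "a passphrase kept in your head")

    print(decoy_seed.hex()[:16], real_seed.hex()[:16])
```

The decoy only helps if it looks lived in, and on a public chain the transaction history can still tie the two wallets together, so this goes hand in hand with minding the metadata.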

Speak plainly

But mostly, speak plainly to users. Explain things with clarity. Especially trade-offs, because most of the time, it looks like we just want to talk about features.

Your users need to be able to understand what your solution can and cannot do. They need to get as clear an overview as possible because, once they’ve built a mental model of your tool, they aren’t very likely to change it.

You want them to get this model right.

The wave

It feels like we’re on the cusp of something here. All over the space, execution is improving.

Any day now someone’s going to solve enough usability issues and decentralization will pour into the mainstream.

When this happens, users will flood in. They’ll start poking around and tinkering with what you have given them. Most of them will keep going with the first platform they get used to.

And then 95% of them will never change the defaults.

So try to make choices which lead to those defaults being as private as you can make them.

And whatever else, remember that once this explodes into the mainstream, the main threat to the majority of your users is not going to be these guys

but this